With 18 apps on the Atlassian Marketplace, eight versions of Confluence, several database systems and 30 A4 pages of acceptance tests, there's a lot to do.

Why should you use UI tests? How did we set up automated tests in our infrastructure? What were the hurdles and how did we overcome them? We'll try to answer all of these questions in this blog post.

The problem

As an app developer for Confluence with more than 18 active apps available on the Atlassian Marketplace, we faced a big challenge before every release. We had to check manually whether our apps were still compatible with all of the supported Confluence versions. At this point (as of January 2018) there are eight versions of Confluence that are officially supported by Atlassian.

We have to make sure that all of our apps work properly with every single supported version of Confluence. During development we mostly concentrated on the latest version, but when we wanted to release an app, we had to check the entire set of versions. Different Confluence versions are not the only source of problems: different database systems can cause them too, and have to be tested separately.

To ensure the highest quality standards, it is necessary to run all of our acceptance tests. For a regular app this might be more than 30 A4 pages! You can easily imagine the effort needed to do this - especially if you have to test the app with every supported version of Confluence and each different database system.

The consequences

We had two options to solve this problem: either take one week to test the app, which means customers have to wait longer for an update, or skip some version combinations and take the risk of bugs slipping through. In the past, we often took the second option - fortunately, mostly without big problems. But you can't be comfortable with such a release, knowing that you did not test as thoroughly as you could have.

This bad feeling carries over directly into development, because every new feature increases the complexity and with it the number of potential sources of error. Development slows down, and refactoring that is actually necessary might be skipped for fear of introducing new errors into old code.

The vision

Acceptance tests run automatically - as if an actual user were sitting in front of the screen and clicking through the application. For every combination of supported Confluence version and database system there's a clean test environment. These tests can be executed both locally and in a continuous integration environment (Bamboo or Jenkins).

With this solution there is added value, not only for developers and quality assurance staff, but also for product owners and designers. In the best case, you can reproduce bugs in a clean, isolated test environment which is as similar as possible to a customer's system.

There are three components: a configurable test environment, automated acceptance tests, and a continuous integration environment in which the tests are executed.

The solution...

Building on the Docker setup, we use the Protractor framework to run automated user interface tests (end-to-end testing). These tests open a browser and click through the interface, just like a real user would. HTML elements that are hidden from a user cannot be seen by Protractor either. The tests themselves are written with the JavaScript testing framework Jasmine, which most JavaScript developers are familiar with. We can run the tests invisibly, or watch as the browser does its job. For every test case, a screenshot is taken so that we can analyse the tests afterwards.

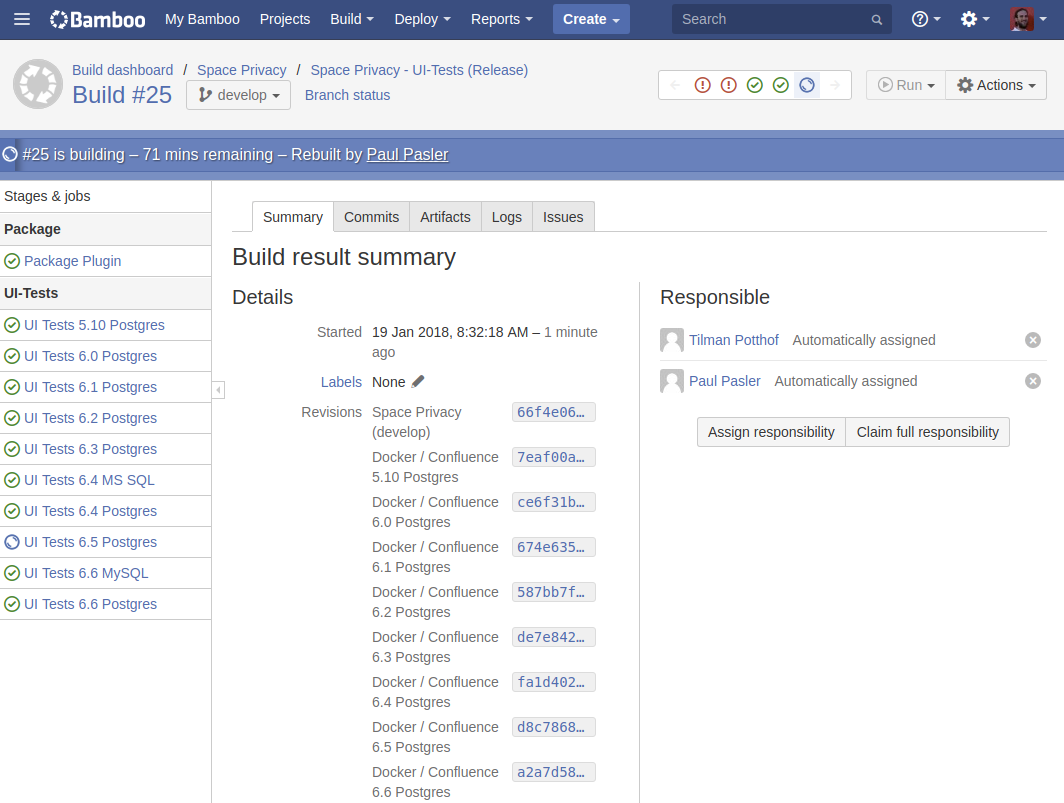

We have used Bamboo for a long time as our build server and for continuous integration. The installation of Docker and Protractor was tricky (I'll write more about that in a future article), but successful in the end. For each app there's a Bamboo build plan, which runs at least once for every Confluence version and database system. Besides the stable released versions of Confluence, these tests can be executed with release candidates or beta versions, to discover compatibility problems before the new Confluence version is released by Atlassian. The introduction of Bamboo's configuration as code reduced the effort needed to manage the supported versions for each app.
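
The idea of one test run per Confluence version and database system can be sketched as a simple matrix. The snippet below is independent of Bamboo and only shows how the combinations multiply; the version numbers, database names and the `buildMatrix` helper are placeholders made up for this example, not the actual list of supported systems.

```javascript
// Illustrative placeholders, not the actual supported versions or databases.
const confluenceVersions = ['6.5.0', '6.6.0'];
const databases = ['postgres', 'mysql'];

// Hypothetical helper: returns one job description per combination.
function buildMatrix(versions, dbs) {
  const jobs = [];
  for (const version of versions) {
    for (const db of dbs) {
      jobs.push({ version, db, name: `confluence-${version}-${db}` });
    }
  }
  return jobs;
}

// Each entry stands for one clean test environment and one test run.
for (const job of buildMatrix(confluenceVersions, databases)) {
  console.log(job.name);
}
```

With eight Confluence versions and several database systems, the number of runs per app grows quickly, which is why keeping this list in one place pays off.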

...to all problems?

Despite the improvements with user interface tests described above, not all problems were solved and new challenges appeared. Protractor does not fully act like a real user. Many actions are unrealistically fast, which leads to problems with asynchronous tasks and failed tests. Furthermore, there's the initial hurdle of implementing user interface tests in a web application as complex as Confluence.

To counter these problems, we implemented the 'Confluence Protractor Base', which encapsulates basic Confluence user tasks like logging in or the creation/deletion of users, spaces and pages. In addition, there are helper functions which wait for asynchronous elements or calls. Since July 2017, it has been published as open source on GitHub (confluence-protractor-base).
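
The principle behind such wait helpers is polling: check a condition repeatedly until it holds or a timeout expires. The following is a generic sketch of that technique in plain JavaScript. The function name `waitFor` and its parameters are hypothetical and are not the API of the Confluence Protractor Base. In real Protractor tests, `browser.wait` combined with `ExpectedConditions` covers the same ground against actual page elements.

```javascript
// Generic polling helper (hypothetical, for illustration only).
// Resolves once `condition` returns a truthy value and rejects if that
// does not happen within `timeoutMs`. `condition` may return a promise.
function waitFor(condition, timeoutMs = 5000, intervalMs = 100) {
  const deadline = Date.now() + timeoutMs;
  return new Promise(function (resolve, reject) {
    function poll() {
      Promise.resolve()
        .then(condition)
        .then(function (result) {
          if (result) {
            resolve(result);
          } else if (Date.now() >= deadline) {
            reject(new Error('Condition not met within ' + timeoutMs + ' ms'));
          } else {
            setTimeout(poll, intervalMs);
          }
        })
        .catch(reject); // an error thrown by the condition fails the wait
    }
    poll();
  });
}

// Usage: simulate an element that appears after roughly 300 ms.
const start = Date.now();
waitFor(() => Date.now() - start > 300, 2000, 50)
  .then(() => console.log('element appeared'));
```

An explicit timeout matters here: without it, a test that waits for an element that never appears would block the whole build instead of failing with a clear message.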

So far, so good. But even automated functional testing can't fully replace manual testing. You can only test what you already know; you can't test for undiscovered bugs. That's why all of our apps are still tested by hand, and are installed for at least two days on our internal production system (dogfooding).

What's next?

Currently we are working to stabilize our user interface test suite and expand the automation, which means improving the configuration of the Docker setup and the Bamboo system, as well as the reliability of the user interface tests themselves. One of our tasks covers the execution of tests on cloned production systems. Furthermore, we want to improve the performance of the tests: depending on the number and complexity of the tests, they need more than an hour to finish, which prevents us from running them more often.

One of our main goals is to improve the usability of the Confluence Protractor Base to help developers get started with the tool. We'd love to get feedback to help us improve the Confluence Protractor Base, which is why we have written a short tutorial to show you how to use the library.
